Using LSTM Autoencoder to Detect Anomalies and Classify Rare Events

So many times, actually most of real-life data, we have unbalanced data. Data were the events in which we are interested the most are rare and not as frequent as the normal cases. As in fraud detection, for instance. Most of the data is normal cases, whether the data is already labeled or not, and we want to detect the anomalies or when the fraud happens. When dealing with unlabeled data, we usually go to “outliers detection” methods such as Isolation Forest, Cluster-Based Local Outlier Factor (CBLOF), Histogram-Based Outlier Detection (HBOS) etc. While labeled data is considered as a “classification” problem and classifiers like Support Vector Machine (SVM) and Random Forest are used.

However, given the positive data points are rare in the data, the algorithm finds it difficult to learn so much from the data. A classifier for example, usually ends up predicting “negative” for all cases to achieve the best accuracy. Here we will look at a different approach that can be used in both supervised and unsupervised anomaly detection and rare event classification problems. Long Short-Term Memory Autoencoders.

Long Short-Term Memory neural network is a special type of Recurrent neural networks. LSTMs are great in capturing and learning the intrinsic order in sequential data as they have internal memory. That’s why they are famous in speech recognition and machine translation.

On the other hand, an autoencoder can learn the lower dimensional representation of the data capturing the most important features within it. Autoencoder mainly consist of three main parts:

1) Encoder, which tries to reduce data dimensionality.

Here we will see how we can combine the two techniques to approach rare event classification. In a previous blog, we have looked at another use case of autoencoders in clustering.

Problem Description

We will use Credit Card Fraud dataset to predict frauds. The data we will use is available at this GitHub repository. Thanks to this repository, the data is prepared for modelling. Features and normalized and everything is ready for the modeling part.

Approach Description

Autoencoders try to capture the most important features and structures in data. With the help of LSTMs, it can capture the order in data, and then re-represent it in a denoised lower dimensional form. We will use this power to make the autoencoder learn the features of a normal data point only, i.e. we will train the autoencoder only with the negative/non-fraud observations. The autoencoder will try to compress the data then reconstruct it again. Upon training, the autoencoder will be able to reconstruct the normal observations with small error or difference between the original data. However, when it tries to predict/reconstruct a positive/fraud observation, it will somehow be surprised as it never saw such sequences while trained. So the reconstruction error between original and reconstructed data will be high for fraudulent ones than for normal ones. We will then set the threshold that separates the normal and the fraud observations. Things will be clearer with code. So let’s get into the real work.

N.B. This technique is useful for unsupervised anomaly detection as well.

Data Preparation

As usual we will start importing all the classes and functions we will need.

import tarfile import pandas as pd import numpy as np import matplotlib.pyplot as plt import seaborn as sns from keras.models import Input, Model from keras.layers import Dense, LSTM from keras.layers import RepeatVector, TimeDistributed from keras import optimizers from keras.callbacks import ModelCheckpoint, EarlyStopping from sklearn.model_selection import train_test_split from sklearn.metrics import confusion_matrix, precision_recall_curve from sklearn.metrics import accuracy_score, precision_score from sklearn.metrics import recall_score, auc, roc_curve np.random.seed(13)

Now we will read the downloaded data and look at the percentage of the positive class in it.

with tarfile.open("creditcardfraud_normalised.tar.gz", "r:*") as tar:

csv_path = tar.getnames()[0]

df = pd.read_csv(tar.extractfile(csv_path))

df.head()

print("class counts:\n", df["class"].value_counts())

print("\n%% of positive values in data: %.2f%%" %

((df[df["class"] == 1].shape[0] / df.shape[0]) * 100))

# class counts:

# 0 284315

# 1 492

# Name: class, dtype: int64

# % of positive values in data: 0.17%

To make the problem a little bit harder, we will make the positive observations a little bit more scarse by removing some of it randomly.

df = df.drop(df.query("`class` > 0").sample(frac = 0.25).index)

print(df["class"].value_counts())

print("\n%% of positive values in data after deleting some: %.2f%%" %

((df[df["class"] == 1].shape[0] / df.shape[0]) * 100))

predictors = df.drop(["class"], axis = 1)

target = df["class"]

# 0 284315

# 1 369

# Name: class, dtype: int64

# % of positive values in data after deleting some: 0.13%

As we have mentioned, the data is already transform, normalized and prepared for modeling, so we will now split the data into train and test.

X_train, X_test, y_train, y_test = train_test_split(

predictors, target,

stratify = target,

random_state = 13,

test_size = 0.25)

We want to train the autoencoder with negative observations only, so we willprepare the train dataset with removing all positive cases. Also from the test dataset we prepare a validation dataset that we will use while modeling and afterwards to se the threshold.

X_val = X_test[:3000] y_val = y_test[:3000] X_test = X_test[3000:] y_test = y_test[3000:] X_train_0 = X_train[y_train != 1] y_train_0 = y_train[y_train != 1] X_test_0 = X_test[y_test != 1] X_val_0 = X_val[y_val != 1] y_train_0 = y_train[y_train != 1]

LSTMs expect a 3D arrays, so we will define a function that converts 2D to 3D arrays. In addition, we will define a function that flattens a 3D array back to a 2D one. The mse_3d function will calculate the reconstruction error between the original and the reconstructed data.

def arr_reshape(x, arr_type = "float32"):

return np.asarray(x).astype(arr_type).reshape((-1, 1, x.shape[1]))

def flatten(arr):

return arr.reshape(-1, arr.shape[-1])

def mse_3d(x, y):

return np.mean(np.power(flatten(x) - flatten(y), 2), axis = 1)

We now reshape all data that the autoencoder will use.

reshaped_train_0 = arr_reshape(X_train_0) reshaped_val_0 = arr_reshape(X_val_0) reshaped_test_0 = arr_reshape(X_test_0) reshaped_val = arr_reshape(X_val) reshaped_test = arr_reshape(X_test) reshaped_train = arr_reshape(X_train)

inputs_dim = reshaped_train_0.shape[2]

Now to the fun part. The model.

We will wrap the model and all its function a Python class so that we have everything in one place.

The class methods will have default arguments with the values that we decided to use in our model. Feel free to change them if needed.

class LSTMAutoencoder(Model):

## the class is initiated with the number of dimensions and

## timesteps (the 2nd dimension in the 3 dimensions)

## and the number of dimensions to which we want the encoder

## to represent the data (bottleneck)

def __init__(self, n_dims, n_timesteps = 1,

n_bottleneck = 8, bottleneck_name = "bottleneck"):

super().__init__()

self.bottleneck_name = bottleneck_name

self.build_model(n_dims, n_timesteps, n_bottleneck)

## each of the encoder and decoder will have two layers

## with hard coded parameters for simplicity

def build_model(self, n_dims, n_timesteps, n_bottleneck):

self.inputs = Input(shape = (n_timesteps, n_dims))

e = LSTM(16, activation = "relu", return_sequences = True)(self.inputs)

## code layer or compressed form of data produced by the autoencoder

self.bottleneck = LSTM(n_bottleneck, activation = "relu",

return_sequences = False,

name = self.bottleneck_name)(e)

e = RepeatVector(n_timesteps)(self.bottleneck)

decoder = LSTM(n_bottleneck, activation = "relu",

return_sequences = True)(e)

decoder = LSTM(16, activation = "relu", return_sequences = True)(decoder)

self.outputs = TimeDistributed(Dense(n_dims))(decoder)

self.model = Model(inputs = self.inputs, outputs = self.outputs)

## model summary

def summary(self):

return self.model.summary()

## compiling the model with adam optimizer and mean squared error loss

def compile_(self, lr = 0.0003, loss = "mse", opt = "adam"):

if opt == "adam":

opt = optimizers.Adam(learning_rate = lr)

else:

opt = optimizers.SGD(learning_rate = lr)

self.model.compile(loss = loss, optimizer = opt)

## adding some model checkpoints to ensure the best values will be saved

## and early stopping to prevent the model from running in vain

def callbacks(self, **kwargs):

self.mc = ModelCheckpoint(filepath = kwargs.get("filename"),

save_best_only = True, verbose = 0)

self.es = EarlyStopping(monitor = kwargs.get("monitor"),

patience = kwargs.get("patience"))

## model fit

def train(self, x, y, x_val = None, y_val = None,

n_epochs = 15, batch_size = 32,

verbose = 1, callbacks = None):

if x_val is not None:

self.model.fit(x, y, validation_split = 0.2,

epochs = n_epochs, verbose = verbose,

batch_size = batch_size)

else:

self.model.fit(x, y, validation_data = (x_val, y_val),

epochs = n_epochs, verbose = verbose,

batch_size = batch_size,

callbacks = [self.mc, self.es])

## reconstruct the new data

## should be with lower error for negative values than

## the error with the positive ones

def predict(self, xtest):

return self.model.predict(xtest)

## after investigating the error differences, we set the threshold

def set_threshold(self, t):

self.threshold = t

## after setting the threshold, we can now predict the classes from the

## reconstructed data

def predict_class(self, x_test, predicted):

mse = mse_3d(x_test, predicted)

return 1 * (mse > self.threshold)

## in case we are interested in extracting the (bottleneck) the low-dimensional

## representation of the data

def encoder(self, x):

self.encoder_layer = Model(inputs = self.inputs, outputs = self.bottleneck)

return self.encoder_layer.predict(x)

Initiate now the model.

lstm = LSTMAutoencoder(inputs_dim) lstm.model.summary() # Model: "model" # _________________________________________________________________ # Layer (type) Output Shape Param # # ================================================================= # input_1 (InputLayer) [(None, 1, 29)] 0 # _________________________________________________________________ # lstm (LSTM) (None, 1, 16) 2944 # _________________________________________________________________ # bottleneck (LSTM) (None, 8) 800 # _________________________________________________________________ # repeat_vector (RepeatVector) (None, 1, 8) 0 # _________________________________________________________________ # lstm_1 (LSTM) (None, 1, 8) 544 # _________________________________________________________________ # lstm_2 (LSTM) (None, 1, 16) 1600 # _________________________________________________________________ # time_distributed (TimeDistri (None, 1, 29) 493 # ================================================================= # Total params: 6,381 # Trainable params: 6,381 # Non-trainable params: 0 # _________________________________________________________________

Setting the callbacks and compiling the model.

lstm.callbacks(filename = "./lstm_autoenc.h5", patience = 3, monitor = "val_loss") lstm.compile_()

The main step! Model fitting on the negative/non-fraud observations.

lstm.train(reshaped_train_0, reshaped_train_0,

reshaped_val_0, reshaped_val_0,

n_epochs = 10)

# Epoch 1/10

# 5331/5331 [==============================] - 15s 2ms/step - loss: 0.0515 - val_loss: 0.0016

# Epoch 2/10

# 5331/5331 [==============================] - 13s 2ms/step - loss: 0.0016 - val_loss: 0.0015

# ...

# ...

# Epoch 10/10

# 5331/5331 [==============================] - 13s 2ms/step - loss: 8.0738e-04 - val_loss: 7.6509e-04

Now the model seems to have kind of converged. Great! Now let’s try to set the threshold that separates the normal from the fraud cases.

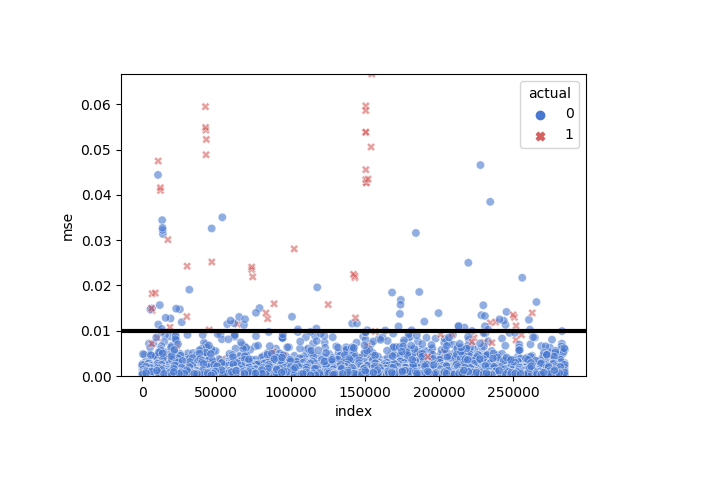

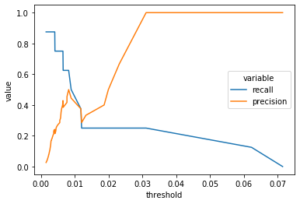

We will make the model predict/reconstruct the validation data (including the positive cases) and compute the Mean Squared Error between the reconstructed and original data. Then using the actual classes and the error values, we will calculate and plot the recall and precision and try to spot a suitable error value that gives good recall and precision.

val_preds = lstm.predict(reshaped_val)

val_mse = mse_3d(reshaped_val, val_preds)

val_error = pd.DataFrame({

"mse": val_mse,

"actual": y_val

})

precision, recall, threshold = precision_recall_curve(

val_error["actual"], val_error["mse"])

val_prt = pd.DataFrame({

"threshold": threshold,

"recall": recall[1:],

"precision": precision[1:]

})

val_prt_melted = pd.melt(val_prt, id_vars = ["threshold"],

value_vars = ["recall", "precision"])

sns.lineplot(x = "threshold", y = "value",

hue = "variable", data = val_prt_melted)

Value 0.01 seems to be a good threshold. Having a very low value, can mean that negative and positive cases are somehow so similar in essence.

lstm.set_threshold(0.01)

Now we look at how this threshold value can separate the cases in the test data. We will first reconstruct the test dataset and again compute the MSE between the reconstructed and original data.

test_preds = lstm.predict(reshaped_test)

test_mse = mse_3d(reshaped_test, test_preds)

test_error = pd.DataFrame({"mse": test_mse,

"actual": y_test})

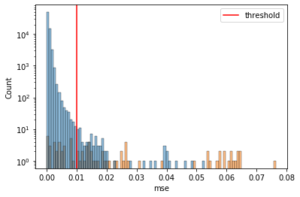

Let’s now see the distribution of the error values and how well the threshold separates the error (high) for the positive cases.

g = sns.histplot(x = "mse", hue = "actual",

data = test_error, bins = 100)

plt.axvline(lstm.threshold, color = "r", label = "threshold")

plt.legend(loc = "upper right")

g.set_yscale("log")

plt.show()

It seems that after the threshold, yes the positive cases increase while the negative ones decrease.

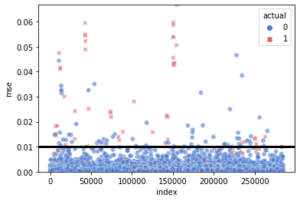

Another visualization for the threshold

g = sns.scatterplot(

x = "index", y = "mse",

hue = "actual", style = "actual",

data = test_error.reset_index(),

palette = {0: "#4878CF", 1: "#D65F5F"},

alpha = 0.6)

g.set(ylim = (0, max(test_error.mse)))

g.axhline(lstm.threshold, color = "black", linewidth = 3)

As we see, the normal cases seem to lie down the threshold and most of the fraud cases are above it.

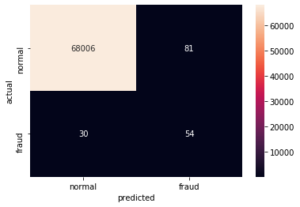

Let’s now evaluate the model performance and look at the accuracy, recall and precision as well as the confusion matrix.

pred_y = lstm.predict_class(reshaped_test, test_preds)

conf_matrix = confusion_matrix(y_test, pred_y)

print("Accuracy: %.2f%%" % (100 * accuracy_score(y_test, pred_y)))

print("Precision: %.2f%%" % (100 * precision_score(y_test, pred_y)))

print("Recall: %.2f%%" % (100 * recall_score(y_test, pred_y)))

# Accuracy: 99.84%

# Precision: 40.00%

# Recall: 64.29%

sns.heatmap(conf_matrix,

xticklabels = ["normal", "fraud"],

yticklabels=["normal", "fraud"],

annot = True, fmt = "d")

plt.ylabel("actual")

plt.xlabel("predicted")

plt.show()

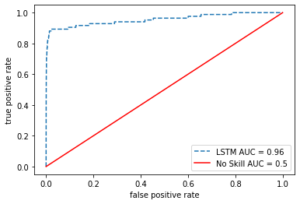

Looking at the Area Under the Curve (AUC) is also important in evaluating a classifier.

fpr, tpr, _ = roc_curve(test_error["actual"], test_error["mse"])

roc_auc = auc(fpr, tpr)

plt.plot(fpr, tpr, ls = "--", label = "LSTM AUC = %0.2f" % roc_auc)

plt.plot([0,1], [0,1], c = "r", label = "No Skill AUC = 0.5")

plt.legend(loc = "lower right")

plt.ylabel("true positive rate")

plt.xlabel("false positive rate")

plt.show()

The autoencoder with the set threshold seems to perform so well in detecting the anomalies (fraud cases).

Another classifier, like SVM or Logistic Regression, would perform better on this data. But LSTM Autoencoder outperforms them when the positive observations are so scarse in data. It is really a great tool to add to your skilset.

Leave a Reply